Overview

IQBench is a novel benchmark developed to evaluate the fluid intelligence of Vision-Language Models (VLMs) using standardized visual IQ tests. It features:

- 500 human-curated questions

- 10 reasoning domains

- Sources: Images sourced from online repositories, textbooks, and educational content.

- Editing: Images were cleaned or regenerated to ensure quality and legality.

Unlike other benchmarks, IQBench emphasizes both prediction accuracy and interpretability of reasoning.

Tasks

- MDRT: Mechanical Deductive Reasoning Test

- DRTF: Deductive Reasoning Test with Figures

- 3D SPRT: 3D Spatial Deductive Reasoning Test

- VRTS: Verbal Reasoning Test with Syllogisms

- IVRT: Inductive Verbal Reasoning Test

- Num.: Numerical Reasoning

- FS: Figure Series

- NS: Number Series

- Ana5 / Ana3: Anagram Tests (5/3-letter)

Dataset Statistics

| Metric | Value |

|---|---|

| Total samples | 500 |

| Number of topics | 10 |

| Samples per topic | 50 |

| Multiple choice questions | 110 |

| Open-ended questions | 390 |

| Avg. question length | 27 words |

| Avg. reasoning pattern length | 48 words |

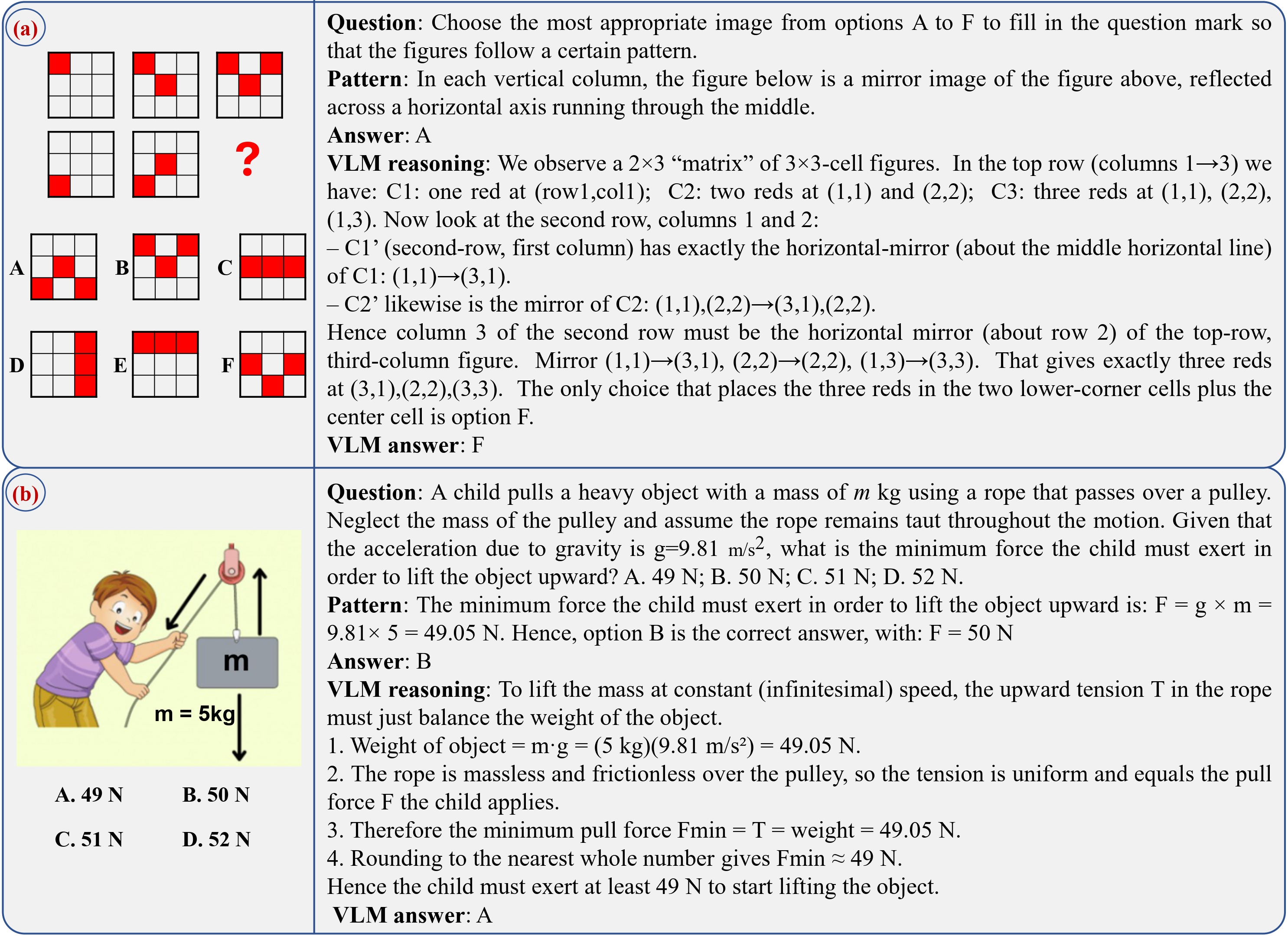

Dataset examples

Data Quality Control

- Uniqueness: Lexical overlap analysis to remove duplicates

- Standardization: Manual review to ensure consistency in format

- Visual emphasis: Questions are vision-centric to minimize reliance on language priors

- Legal compliance: All images are verified or newly created to avoid copyright issues

- Reduced data leakage: Human-generated content decreases the chance of pretraining contamination

Evaluation Methods

IQBench adopts a dual-metric evaluation framework to assess both the accuracy and reasoning quality of Vision-Language Models (VLMs):

1. Accuracy Score

- Measures if the final answer is correct (exact match).

- Applies to multiple-choice and open-ended questions.

- Score:

1(correct),0(incorrect).

2. Reasoning Score

- Evaluates how well the model’s explanation aligns with the expected reasoning path.

- Uses an LLM-as-judge approach (e.g.,

gpt-4o-mini) to compare model explanations with human-annotated patterns. - Score:

1(aligned),0(misaligned).

Human Evaluation

- Human judges assess reasoning quality for qualitative insights, complementing automated scores.

This combined approach ensures a more comprehensive evaluation of both answer accuracy and interpretability of reasoning.

📊 IQBench Evaluation Results

🔍 Reasoning Evaluation

| Model | MDRT | DRTF | 3D SPRT | VRTS | IVRT | Num. | FS | NS | Ana5 | Ana3 | Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|

gemini-2.5-flash |

0.60 | 0.78 | 0.22 | 0.72 | 0.78 | 0.72 | 0.54 | 0.94 | 0.14 | 0.42 | 0.586 |

gemini-2.0-flash |

0.50 | 0.52 | 0.16 | 0.74 | 0.74 | 0.28 | 0.32 | 0.58 | 0.16 | 0.08 | 0.408 |

claude-3.7-sonnet |

0.58 | 0.82 | 0.36 | 0.72 | 0.70 | 0.50 | 0.54 | 0.76 | 0.04 | 0.14 | 0.516 |

claude-3.5-sonnet |

0.60 | 0.64 | 0.16 | 0.74 | 0.72 | 0.16 | 0.28 | 0.52 | 0.12 | 0.06 | 0.400 |

gpt-4o |

0.60 | 0.44 | 0.56 | 0.78 | 0.80 | 0.30 | 0.68 | 0.44 | 0.04 | 0.02 | 0.466 |

o4-mini |

0.92 | 0.88 | 0.82 | 0.78 | 0.80 | 0.72 | 0.90 | 0.90 | 0.10 | 0.14 | 0.696 |

gpt-o3 |

0.70 | 0.88 | – | – | – | – | – | – | 0.12 | – | – |

✅ Accuracy Evaluation

| Model | MDRT | DRTF | 3D SPRT | VRTS | IVRT | Num. | FS | NS | Ana5 | Ana3 | Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|

gemini-2.5-flash |

0.60 | 0.78 | 0.18 | 0.66 | 0.78 | 0.74 | 0.60 | 0.88 | 0.14 | 0.42 | 0.578 |

gemini-2.0-flash |

0.58 | 0.56 | 0.22 | 0.70 | 0.74 | 0.52 | 0.44 | 0.84 | 0.16 | 0.14 | 0.490 |

claude-3.7-sonnet |

0.64 | 0.90 | 0.40 | 0.72 | 0.68 | 0.46 | 0.66 | 0.82 | 0.04 | 0.16 | 0.548 |

claude-3.5-sonnet |

0.62 | 0.68 | 0.20 | 0.74 | 0.74 | 0.32 | 0.42 | 0.76 | 0.12 | 0.10 | 0.470 |

gpt-4o |

0.56 | 0.42 | 0.20 | 0.80 | 0.74 | 0.36 | 0.26 | 0.66 | 0.06 | 0.02 | 0.408 |

o4-mini |

0.72 | 0.86 | 0.34 | 0.66 | 0.76 | 0.82 | 0.60 | 0.94 | 0.02 | 0.14 | 0.615 |

gpt-o3 |

0.70 | 0.88 | – | – | – | – | – | – | 0.12 | – | – |